Bagging Predictors. Machine Learning

Tests on real and simulated data sets using classification and regression trees and subset selection in linear regression show that bagging can give substantial gains in accuracy. Let me briefly define variance and overfitting.

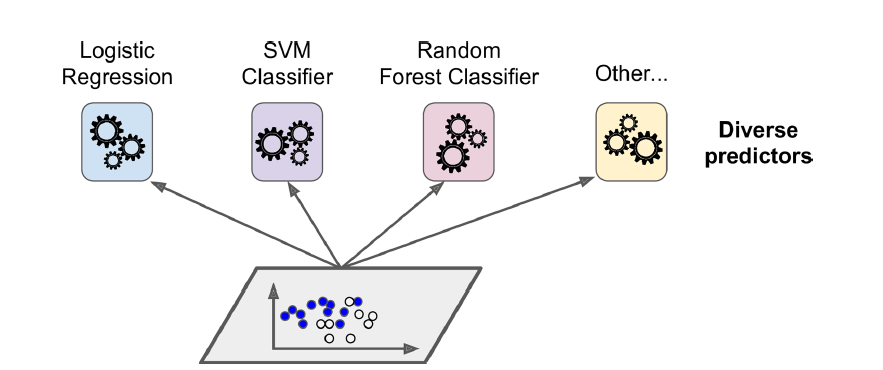

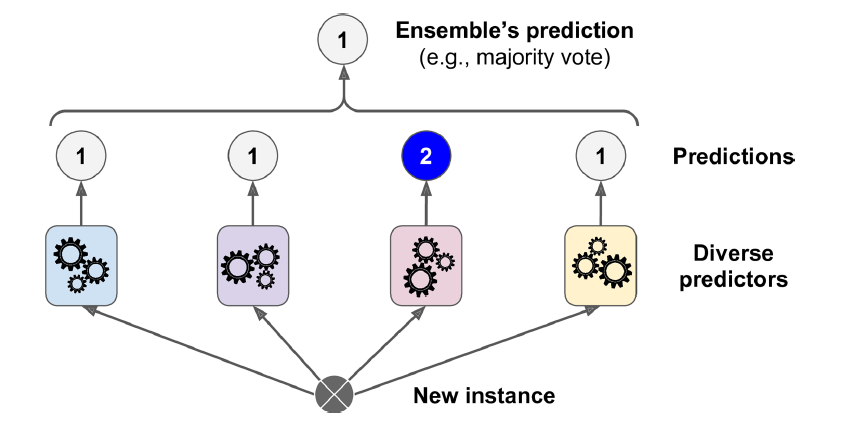

Ensemble Methods In Machine Learning Bagging Versus Boosting Pluralsight

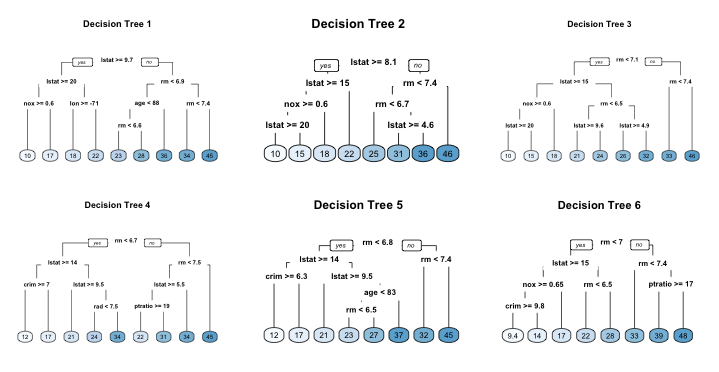

Methods such as decision trees can be prone to overfitting the training set, which can lead to wrong predictions on new data.

Important customer groups can also be determined based on customer behavior and temporal data. Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor.
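Breiman's paper describes the aggregation step as averaging over the versions when the outcome is numerical and taking a plurality vote when the outcome is a class. The sketch below shows only that step, using Python's standard library; the function names `aggregate_numeric` and `aggregate_class` and the sample predictions are hypothetical, chosen for illustration.

```python
from collections import Counter
from statistics import mean


def aggregate_numeric(predictions):
    """Average the versions' predictions for a numerical outcome (hypothetical helper)."""
    return mean(predictions)


def aggregate_class(predictions):
    """Plurality vote over the versions' predicted class labels (hypothetical helper).

    Ties go to the label encountered first, because Counter.most_common
    orders equal counts by first occurrence.
    """
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical outputs from several bagged versions of one predictor.
print(aggregate_numeric([2.0, 2.5, 3.0, 1.5, 2.0]))  # 2.2
print(aggregate_class(["cat", "dog", "cat", "bird", "dog", "cat"]))  # cat
```

Averaging suits regression because it directly shrinks the spread of the individual predictions; voting is the analogous rule when the versions output discrete labels.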

Variance refers to the change in the model's predictions when it is trained on a different sample of the data. Machine Learning 24, 123-140, 1996. Statistics Department, University of California.

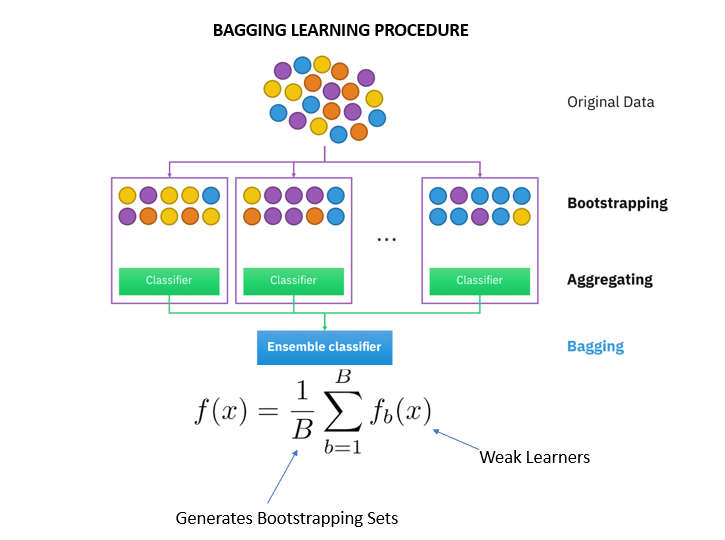

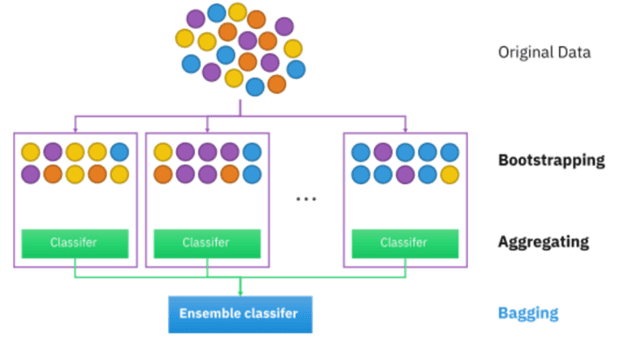

Bagging, also known as bootstrap aggregation, is an ensemble learning method commonly used to reduce variance within a noisy dataset. The first part of this paper provides our own perspective view, in which the goal is to build self-adaptive learners. Improving the scalability of rule-based evolutionary learning.
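Why averaging reduces variance can be made precise with a standard identity. Assume, for this derivation only, that the $B$ bagged predictions at a point $x$, $\hat f_1(x), \dots, \hat f_B(x)$, are identically distributed with variance $\sigma^2$ and pairwise correlation $\rho$. Expanding the variance of their mean into $B$ variance terms and $B(B-1)$ covariance terms gives:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
= \frac{1}{B^2}\left(B\sigma^2 + B(B-1)\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{B}\sigma^2
$$

As $B$ grows the second term vanishes, so under these assumptions the remaining variance is set by how correlated the bootstrap models are, not by how many of them are averaged.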

The vital element is the instability of the prediction method: if perturbing the learning set can cause significant changes in the predictor constructed, bagging can improve accuracy. Bagging aims to reduce variance and overfitting in machine learning models.

Manufactured in The Netherlands. Bagging predictors, by L. Breiman, Machine Learning 24, 1996.

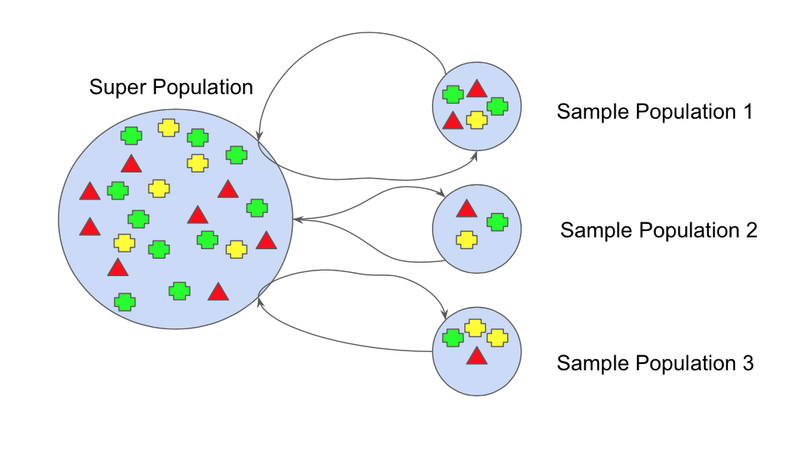

Repeated tenfold cross-validation experiments were used to predict the QLS and GAF functional outcomes of schizophrenia from clinical symptom scales using machine learning. Bootstrap aggregation (bagging) is an ensembling method that trains each base model on a bootstrap sample of the training data and aggregates their predictions. Bagging Predictors, Leo Breiman, Statistics Department, University of California.

Evolutionary learning techniques are comparable in accuracy with other learning methods. Machine Learning 24, 123-140, 1996. © 1996 Kluwer Academic Publishers, Boston. Customer churn prediction was carried out using AdaBoost classification and BP neural networks.

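In this article we report on an approach based on supervised learning to automatically infer users' transportation modes, including driving, walking, taking a bus and riding a bike, from raw GPS data. In bagging, a random sample of the training set is selected with replacement, so an individual data point can be chosen more than once. Self-adaptive learners are learning algorithms that improve their bias dynamically through experience.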
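Each bagged model is trained on a bootstrap replicate: $n$ points drawn uniformly with replacement from an $n$-point training set, so some points repeat and others are left out. The sketch below uses only Python's `random` module; `bootstrap_sample` and `unique_fraction` are hypothetical helper names. The expected fraction of distinct original points in one replicate is $1 - (1 - 1/n)^n$, which tends to $1 - 1/e \approx 0.632$ as $n$ grows.

```python
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random, with replacement (hypothetical helper)."""
    return [rng.choice(data) for _ in range(len(data))]


def unique_fraction(n, rng):
    """Fraction of the n original points that appear at least once in one replicate."""
    return len(set(bootstrap_sample(range(n), rng))) / n


rng = random.Random(0)  # fixed seed so runs are reproducible
print(bootstrap_sample(["a", "b", "c", "d"], rng))  # some letters may repeat or be missing
print(round(unique_fraction(100_000, rng), 3))  # expected to be near 0.632
```

Roughly a third of the training points are therefore absent from any given replicate, which is what makes the bagged versions differ from one another.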

In bagging, predictors are constructed using bootstrap samples from the training set, and their predictions are then aggregated. Published 1 August 1996.
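To see the whole loop (draw bootstrap replicates, fit one predictor per replicate, average the outputs), here is a minimal sketch that bags one-split regression trees ("stumps") in pure Python. Stumps are an assumption of this example, picked because they are short to write and unstable; they are not presented as the base learner of any particular source. `fit_stump` and `bag` are hypothetical names, and the data are synthetic.

```python
import random
from statistics import fmean


def fit_stump(xs, ys):
    """Fit a one-split regression tree by minimising squared error (hypothetical helper).

    Returns a function x -> prediction.
    """
    pairs = sorted(zip(xs, ys))
    best = None  # (sse, threshold, left_mean, right_mean)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm, rm = fmean(left), fmean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    if best is None:  # all x values identical: fall back to the overall mean
        overall = fmean(ys)
        return lambda x: overall
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def bag(xs, ys, n_models, rng):
    """Fit n_models stumps on bootstrap replicates and average their outputs (hypothetical)."""
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # indices drawn with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: fmean(m(x) for m in models)


rng = random.Random(1)  # fixed seed for reproducibility
xs = [i / 10 for i in range(50)]
ys = [x + rng.gauss(0, 0.3) for x in xs]  # synthetic noisy linear trend
single = fit_stump(xs, ys)
bagged = bag(xs, ys, n_models=50, rng=rng)
for x in (0.5, 2.5, 4.5):
    print(x, round(single(x), 2), round(bagged(x), 2))
```

A single stump can only output two values. Because the split point moves from one bootstrap replicate to the next, the averaged predictor becomes a multi-step function that follows the underlying trend more closely, which is the instability-driven gain the text describes.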

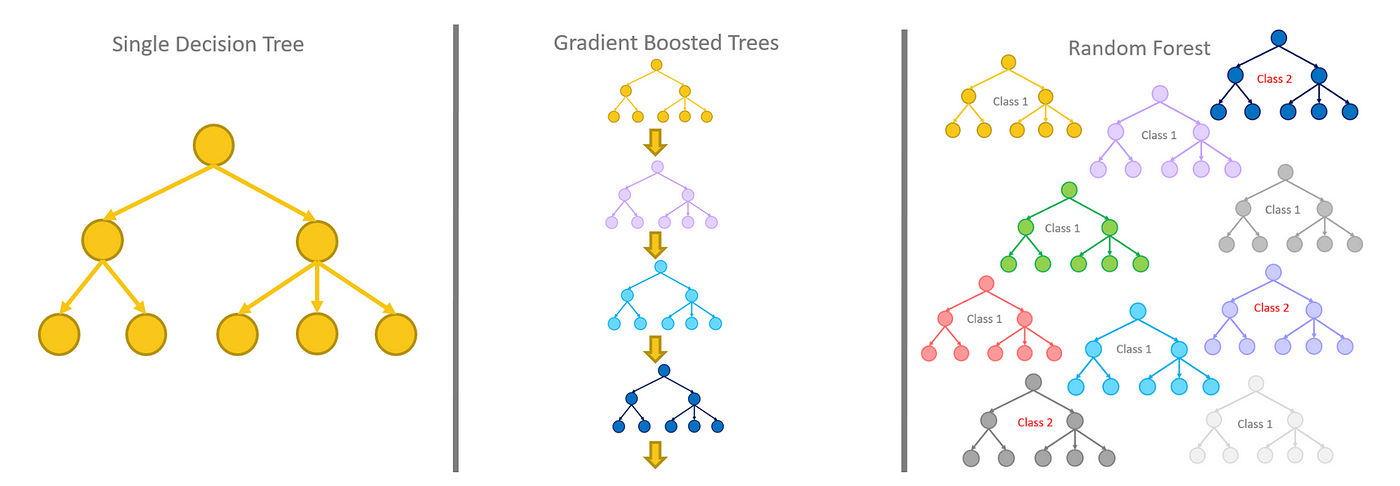

Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms.

Bagging And Pasting In Machine Learning Life With Data

Ensemble Learning Explained Part 1 By Vignesh Madanan Medium

Bagging Machine Learning Through Visuals 1 What Is Bagging Ensemble Learning By Amey Naik Machine Learning Through Visuals Medium

Ensemble Techniques Part 1 Bagging Pasting By Deeksha Singh Geek Culture Medium

Random Forest Classification Explained In Detail And Developed In R Datasciencecentral Com

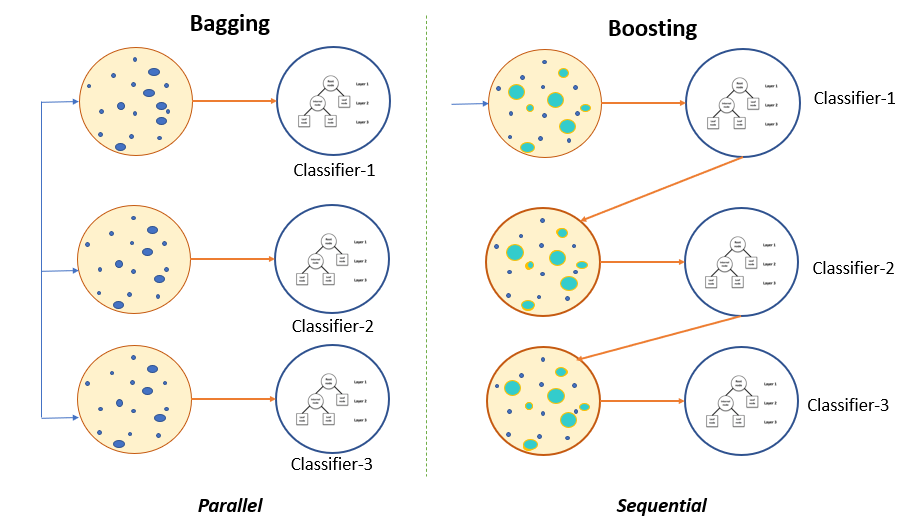

Guide To Ensemble Methods Bagging Vs Boosting

An Introduction To Bagging In Machine Learning Statology

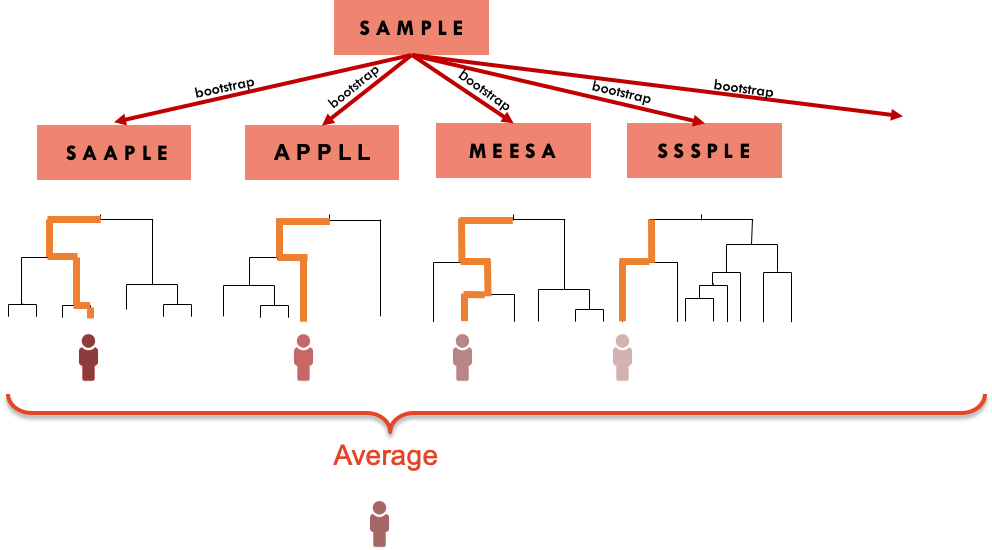

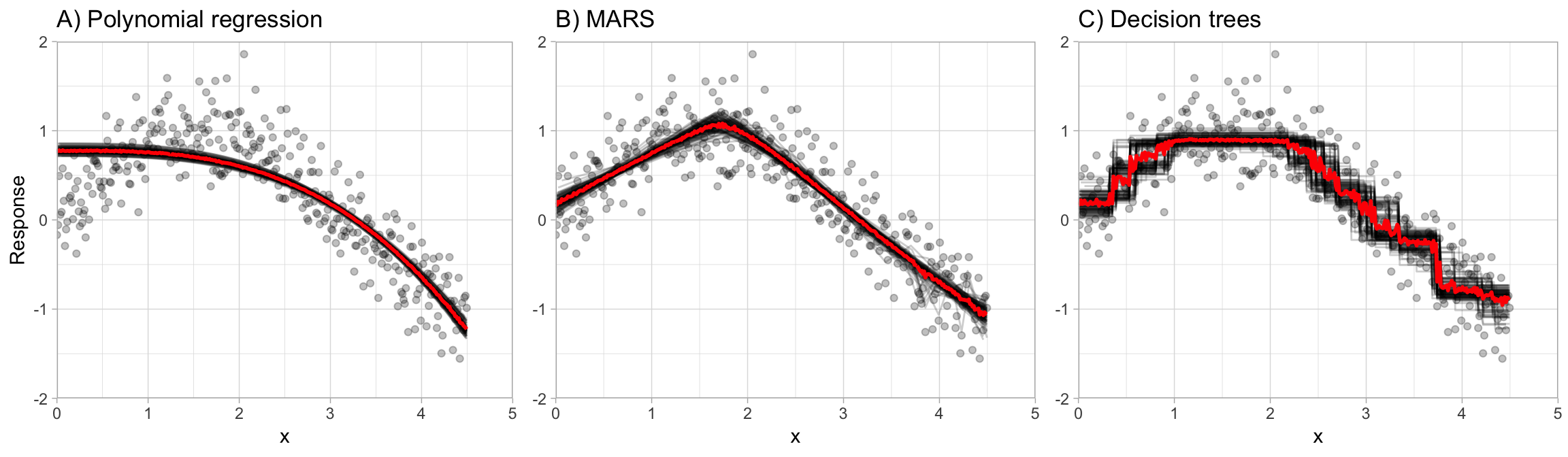

Bootstrap Aggregation Bagging In Regression Tree Ensembles Download Scientific Diagram

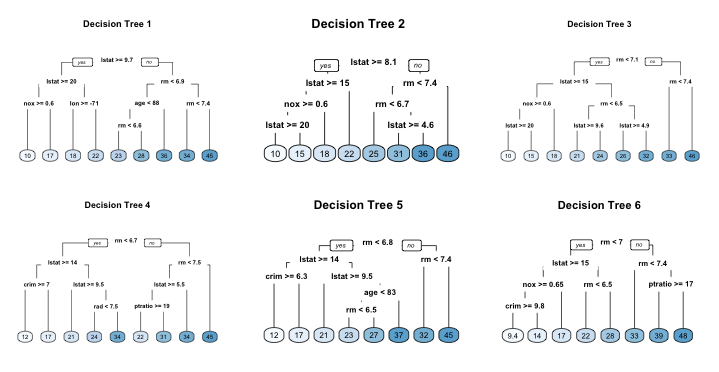

Chapter 10 Bagging Hands On Machine Learning With R

Learn Ensemble Learning Algorithms Machine Learning Jc Chouinard

Ensemble Methods Techniques In Machine Learning Bagging Boosting Random Forest Gbdt Xg Boost Stacking Light Gbm Catboost Analytics Vidhya

Ensemble Models Bagging Boosting By Rosaria Silipo Analytics Vidhya Medium